AI usage rarely fails all at once. It creeps.

A new AI feature ships. Adoption grows. Credits flow. Then one day, customers start complaining about usage spikes nobody expected.

Suddenly, engineering has to explain:

- How did one user burn through most of the monthly budget for their account?

- Why did this request get blocked while another one went through?

- Who is supposed to stop runaway usage before it turns into outages, or an uncomfortable conversation with a customer?

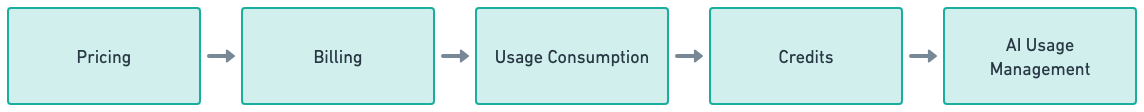

Pricing is already set. Billing already works. But enterprise customers want more than reports. They want control: limits, allocations, and budgets that keep behavior predictable as usage scales.

This is the engineering problem that appears after AI reaches production. And it is not a billing problem.

This post explains why usage management requires a dedicated control layer, and why most systems only discover that need once it is already painful.

The problem shows up in production

When AI usage starts creeping, it rarely triggers an immediate failure. Instead, the system drifts into a state where behavior becomes hard to predict.

Limits exist, but it is unclear which ones apply. Credits exist, but ownership is fuzzy. Budgets exist, but enforcement happens too late to matter. Engineering teams start seeing symptoms before they see a root cause.

- Usage spikes that cannot be explained by a single user or request.

- Inconsistent blocking, where similar calls produce different outcomes.

- Manual overrides to unblock customers, followed by cleanup later.

None of this feels like a pricing problem. It feels like the system is missing a decision point.

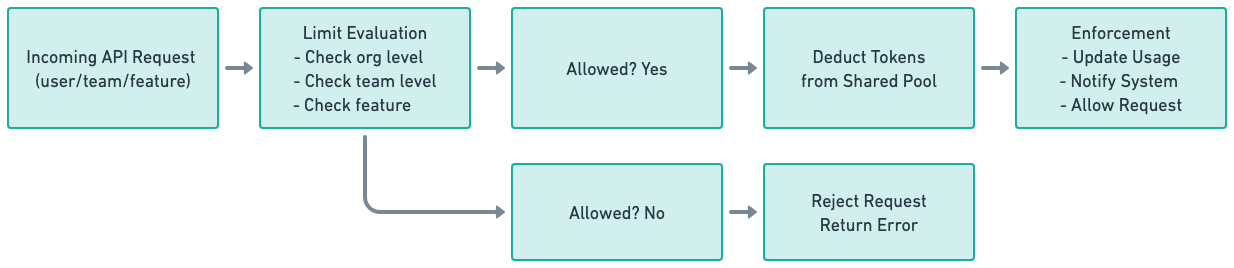

But why? AI-driven workloads change the shape of consumption. Requests are bursty and often generated by other systems. A single action can fan out into many operations, each consuming from shared resources. Under load, multiple requests can hit the same limits at the same time.
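To make the fan-out concrete, here is a minimal sketch of one user action expanding into several model calls that all draw from the same pool. The operation names, token counts, and the one-credit-per-token rate are hypothetical; the point is only that the cost of an action is the sum of everything it triggers, which is what any pre-check has to estimate.

```typescript
// Hypothetical: one user action fans out into several AI operations.
// Names, token counts, and pricing are illustrative assumptions.
interface Operation {
  name: string;
  inputTokens: number;
  maxOutputTokens: number;
}

// Assumed rate: 1 credit per token, input or output.
const CREDITS_PER_TOKEN = 1;

// Worst case: the model uses its full output allowance.
function estimateOperation(op: Operation): number {
  return (op.inputTokens + op.maxOutputTokens) * CREDITS_PER_TOKEN;
}

// The cost of an action is the sum of every operation it triggers.
function estimateAction(ops: Operation[]): number {
  return ops.reduce((total, op) => total + estimateOperation(op), 0);
}

// "Enrich this account" fans out into a planning step plus one call per contact.
const contacts = ["ana", "raj", "li"];
const fanOut: Operation[] = [
  { name: "plan", inputTokens: 800, maxOutputTokens: 400 },
  ...contacts.map((c) => ({ name: `enrich:${c}`, inputTokens: 1200, maxOutputTokens: 600 })),
];

console.log(fanOut.length, estimateAction(fanOut)); // 4 6600
```

A single click here costs 6,600 credits in the worst case, and the number grows with the number of contacts rather than with the number of users.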

At that point, usage management is no longer about tracking what happened. It is about deciding what is allowed to happen. And that decision has to be made while the system is running.

Why billing systems cannot enforce usage

This is where many teams hit a hard boundary.

Billing systems are designed to process usage after execution. They collect events, aggregate consumption, and produce accurate financial outcomes. They are intentionally decoupled from runtime behavior.

Usage enforcement, on the other hand, must sit directly on the execution path. It needs request context, low latency, and deterministic behavior under concurrency. It needs to answer a yes or no question in milliseconds, not reconcile numbers later.

When enforcement is pushed into billing or reporting systems, engineering teams compensate elsewhere. Guards get added to services. Limits are duplicated. Logic drifts. Over time, control becomes fragmented and brittle.

The problem is not misuse of billing systems. It is that they are solving a fundamentally different problem. And the solution has to exist as a first-class system, designed from the start to sit in front of execution, not behind it.

Limits, allocations and budgeting at enterprise scale

Once usage becomes something the system must actively decide on, limits stop being simple counters. Consider this multi-dimensional hard-limit example for a usage-based API requests feature:

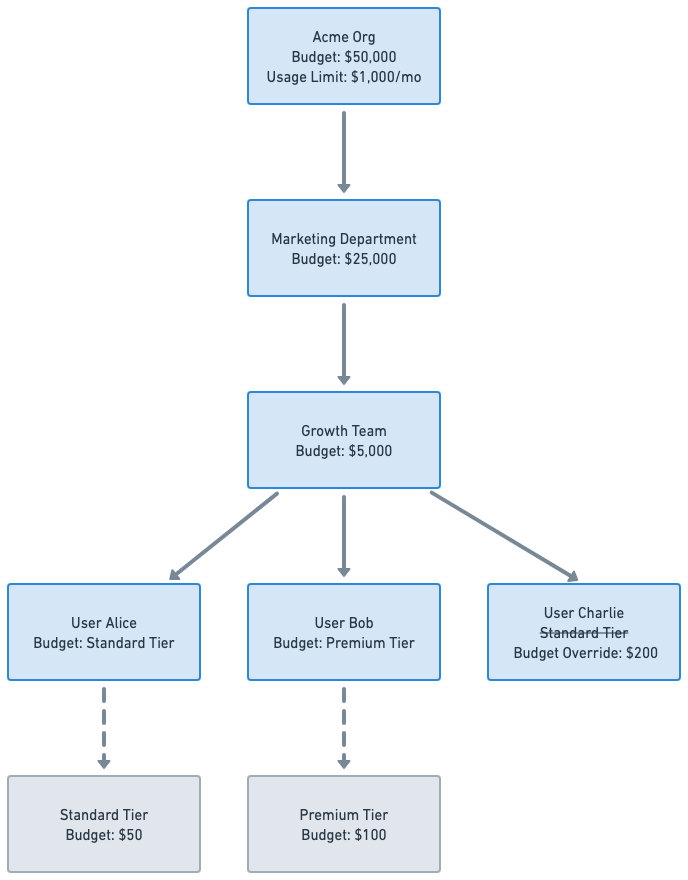

Budgets and limits are defined across organizational structure: teams, business units, environments, and sometimes regions. All of these often draw from the same underlying pool of credits. For example, a business unit may be allocated 1 million AI tokens or a $50,000 budget, subdivided across 10 departments and their employees, with each employee’s limits depending on their seat type.
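The arithmetic of subdividing a shared pool is simple; keeping it consistent is the part that needs enforcing. A minimal sketch, assuming the 1 million-token business unit budget is split evenly across its 10 departments and that child allocations may never sum to more than their parent (real policies may allow deliberate oversubscription). The `AllocationNode` type and both functions are hypothetical, not Stigg data structures.

```typescript
// Hypothetical allocation tree; not a Stigg data structure.
interface AllocationNode {
  id: string;
  limit: number; // tokens allocated to this node
  children: AllocationNode[];
}

// Split a parent's limit evenly across n children; any remainder goes to the first child.
function splitEvenly(parentId: string, limit: number, n: number): AllocationNode {
  const share = Math.floor(limit / n);
  const remainder = limit - share * n;
  const children = Array.from({ length: n }, (_, i): AllocationNode => ({
    id: `${parentId}-dept-${i + 1}`,
    limit: share + (i === 0 ? remainder : 0),
    children: [],
  }));
  return { id: parentId, limit, children };
}

// Ids of nodes whose children are allocated more than the node itself.
function findOversubscribed(node: AllocationNode): string[] {
  const childSum = node.children.reduce((sum, c) => sum + c.limit, 0);
  const flagged = node.children.length > 0 && childSum > node.limit ? [node.id] : [];
  return [...flagged, ...node.children.flatMap(findOversubscribed)];
}

const bu = splitEvenly("bu-emea", 1_000_000, 10);
console.log(bu.children[0].limit); // 100000
console.log(findOversubscribed(bu)); // []

// Raising one department without lowering another breaks the invariant.
bu.children[3].limit += 50_000;
console.log(findOversubscribed(bu)); // [ 'bu-emea' ]
```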

Provisioning customers and their allocations now has to account for dimensions that were not needed before. In practice, that means defining the customer’s resource types, creating resources of those types as demand requires, and allocating credits to them once the customer is provisioned.

Resource Types:

{
  "resourceTypes": [
    {
      "id": "org",
      "name": "Organization",
      "properties": {
        "displayName": { "type": "STRING", "required": true }
      }
    },
    {
      "id": "dept",
      ...
    },
    {
      "id": "team",
      ...
    },
    {
      "id": "user",
      "name": "User",
      "parentResourceTypeId": "team",
      "properties": {
        "email": { "type": "STRING", "required": false },
        "tier": { "type": "ENUM", "values": ["STANDARD", "PREMIUM"], "required": true }
      }
    }
  ]
}

List of resources:

{
  "resources": [
    {
      "id": "org-acme",
      "resourceTypeId": "org",
      "customerId": "cust-acme-inc",
      "properties": {
        "displayName": "Acme Org"
      }
    },
    {
      "id": "dept-marketing",
      ...
    },
    {
      "id": "team-growth",
      ...
    },
    {
      "id": "user-alice",
      "resourceTypeId": "user",
      "parentResourceId": "team-growth",
      "customerId": "cust-acme-inc",
      "properties": {
        "email": "alice@acme.com",
        "tier": "STANDARD"
      }
    },
    {
      "id": "user-bob",
      ...
    }
  ]
}

Resource-level allocations:

{
  "allocations": [
    {
      "resourceId": "org-acme",
      "creditCurrency": "ai-tokens",
      "customerId": "cust-acme-inc",
      "limit": 50000,
      "enforcementType": "HARD"
    },
    {
      "resourceId": "dept-marketing",
      ...
    },
    {
      "resourceId": "team-growth",
      ...
    },
    {
      "resourceId": "user-bob",
      "creditCurrency": "ai-tokens",
      "customerId": "cust-acme-inc",
      "limit": 200
    }
  ]
}
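To see how these three pieces fit together at runtime, here is a sketch that models them as TypeScript types and walks a user up to every resource whose allocation applies to it. It mirrors the JSON above but is a hypothetical illustration, not Stigg’s SDK types. The parent links for the department, the team, and `user-bob` are elided as `...` above, so they are assumed here, and only the allocations whose values appear above are included.

```typescript
// Hypothetical types mirroring the JSON above (not Stigg's SDK types).
interface Resource {
  id: string;
  resourceTypeId: string;
  parentResourceId?: string;
}

interface Allocation {
  resourceId: string;
  creditCurrency: string;
  limit: number;
  enforcementType?: string;
}

// Assumed parent links: dept, team, and user-bob parents are elided in the source JSON.
const resources: Resource[] = [
  { id: "org-acme", resourceTypeId: "org" },
  { id: "dept-marketing", resourceTypeId: "dept", parentResourceId: "org-acme" },
  { id: "team-growth", resourceTypeId: "team", parentResourceId: "dept-marketing" },
  { id: "user-alice", resourceTypeId: "user", parentResourceId: "team-growth" },
  { id: "user-bob", resourceTypeId: "user", parentResourceId: "team-growth" },
];

// Only allocations whose values are shown above; dept and team values are elided.
const allocations: Allocation[] = [
  { resourceId: "org-acme", creditCurrency: "ai-tokens", limit: 50000, enforcementType: "HARD" },
  { resourceId: "user-bob", creditCurrency: "ai-tokens", limit: 200 },
];

const byId = new Map<string, Resource>(resources.map((r): [string, Resource] => [r.id, r]));

// Walk from a resource to the root: [self, parent, grandparent, ...].
function ancestry(resourceId: string): string[] {
  const chain: string[] = [];
  let current = byId.get(resourceId);
  while (current) {
    chain.push(current.id);
    current = current.parentResourceId ? byId.get(current.parentResourceId) : undefined;
  }
  return chain;
}

// Every allocation on the chain applies to a request made by this resource.
function applicableAllocations(resourceId: string, currency: string): Allocation[] {
  const chain = new Set(ancestry(resourceId));
  return allocations.filter((a) => a.creditCurrency === currency && chain.has(a.resourceId));
}

console.log(ancestry("user-bob").join(" > ")); // user-bob > team-growth > dept-marketing > org-acme
console.log(
  applicableAllocations("user-bob", "ai-tokens").map((a) => `${a.resourceId}:${a.limit}`).join(", "),
); // org-acme:50000, user-bob:200
```

A request from `user-bob` is therefore bounded by both his own 200-token limit and the organization’s 50,000-token hard limit.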

From an engineering perspective, this creates a multi-dimensional allocation problem. A single request may touch multiple dimensions simultaneously: the user making the request, the team or org they belong to, the feature or product being invoked, and the shared credit pool backing all of it.

Each of these dimensions may have its own limit, while still contributing to shared consumption. At runtime, the system has to answer a simple question: is this request allowed? But answering it correctly requires resolving all applicable limits consistently, under concurrency, and with low latency. A simple check would look like this:

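// Assumes `stigg` is an initialized Stigg server SDK client and `estimatedCredits`
// holds the estimated cost of the operation. `handleAiRequest` is a hypothetical handler name.
async function handleAiRequest() {
  // 1. Check if the user has sufficient credits across all dimensions
  const entitlement = await stigg.getCreditEntitlement({
    resourceIds: ['user-01', 'emea', 'engineering'], // dimensions
    creditCurrency: 'ai-tokens',
    options: {
      requestedUsage: estimatedCredits, // Pre-check this amount for expensive operations
    },
  });

  // 2. Handle access denial
  if (!entitlement.hasAccess) {
    return entitlement.accessDeniedReason; // Return the deny reason to the user
  }

  // 3. Enough credits: execute the AI operation
  // ...
}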
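Conceptually, answering `hasAccess` for several dimensions means a request passes only if every applicable limit can absorb it, and the consumption is then charged to all of them together. The sketch below shows that all-or-nothing rule on an in-memory map. It is a hypothetical illustration of the semantics, not how Stigg evaluates entitlements; the resource ids reuse the check above and the balances are invented.

```typescript
// Hypothetical in-memory balances per dimension (remaining credits).
type Balances = Map<string, number>;

interface Decision {
  hasAccess: boolean;
  deniedBy?: string; // first dimension that could not absorb the request
}

// Allowed only if every dimension has enough remaining credits.
function check(balances: Balances, resourceIds: string[], requested: number): Decision {
  for (const id of resourceIds) {
    const remaining = balances.get(id) ?? 0; // missing dimension: treated as empty (fail closed)
    if (remaining < requested) return { hasAccess: false, deniedBy: id };
  }
  return { hasAccess: true };
}

// Debit all dimensions or none. There is no await between check and debit,
// so in a single-threaded runtime no other request can interleave.
function consume(balances: Balances, resourceIds: string[], amount: number): Decision {
  const decision = check(balances, resourceIds, amount);
  if (!decision.hasAccess) return decision;
  for (const id of resourceIds) balances.set(id, (balances.get(id) ?? 0) - amount);
  return decision;
}

const balances: Balances = new Map([
  ["user-01", 500],
  ["emea", 10_000],
  ["engineering", 300],
]);

console.log(consume(balances, ["user-01", "emea", "engineering"], 250)); // { hasAccess: true }
console.log(consume(balances, ["user-01", "emea", "engineering"], 100)); // { hasAccess: false, deniedBy: 'engineering' }
```

The second request is denied by `engineering` even though the user and region still have room, and no dimension is partially debited. Treating an unconfigured dimension as empty is a policy choice; a real system might treat it as unlimited instead.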

Even at a modest scale, say 5 products, 10 features, and 50 teams, there are already 2,500 possible limit combinations. At a larger enterprise scale, with hundreds of teams and dozens of features, tens of thousands of combinations are possible. To illustrate the scale challenge, consider an enterprise running 20 teams, each making 1,000 requests per minute to each of 10 features. That’s 200,000 requests per minute, every one of which must be checked against multiple limits in near real time.
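Spelled out, the figures above work as follows. The last line is illustrative only: it assumes each request is checked against three limits (user, team, and organization).

```latex
\begin{aligned}
\text{limit combinations} &= 5 \times 10 \times 50 = 2{,}500 \\
\text{requests per minute} &= 20 \times 10 \times 1{,}000 = 200{,}000 \\
\text{requests per second} &= 200{,}000 / 60 \approx 3{,}333 \\
\text{limit evaluations per second} &= 3 \times 200{,}000 / 60 = 10{,}000
\end{aligned}
```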

This is not just a modeling problem. It is a concurrency problem.

Consumption under load: concurrency, consistency, and correctness

Each entitlement check is then followed by the actual usage, which is reported with resource attribution:

// 1. Execute the AI operation
// ...

// 2. Emit an event with resource attribution
await stigg.reportEvent({
  customerId: 'test-customer-id',
  eventName: 'email_enrichment',
  idempotencyKey: '227c1b73-883a-...',
  dimensions: {
    user_id: 'user-01',
    region: 'emea',
    org: 'engineering'
  },
  timestamp: '2025-10-26T15:01:55.768Z'
});
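The `idempotencyKey` in the event above matters because usage reporting is usually retried: if a request times out after the server has already recorded the event, the retry must not be counted twice. Below is a minimal sketch of deduplication on the receiving side, assuming events are keyed by `idempotencyKey` and held in memory (a real system would persist keys for some retention window). The `UsageLedger` class and the `value` field are hypothetical, not part of any SDK.

```typescript
interface UsageEvent {
  customerId: string;
  eventName: string;
  idempotencyKey: string;
  dimensions: Record<string, string>;
  value: number; // units consumed (assumed field, not in the event shown above)
}

// Hypothetical ledger that counts each idempotency key at most once.
class UsageLedger {
  private seen = new Set<string>();
  private totals = new Map<string, number>();

  // Returns false when the event is a duplicate and was ignored.
  record(event: UsageEvent): boolean {
    if (this.seen.has(event.idempotencyKey)) return false;
    this.seen.add(event.idempotencyKey);
    const key = `${event.customerId}:${event.eventName}`;
    this.totals.set(key, (this.totals.get(key) ?? 0) + event.value);
    return true;
  }

  total(customerId: string, eventName: string): number {
    return this.totals.get(`${customerId}:${eventName}`) ?? 0;
  }
}

const ledger = new UsageLedger();
const enrichment: UsageEvent = {
  customerId: "test-customer-id",
  eventName: "email_enrichment",
  idempotencyKey: "key-1", // illustrative; generate one unique key per logical operation
  dimensions: { user_id: "user-01", region: "emea", org: "engineering" },
  value: 1,
};

ledger.record(enrichment); // first delivery: counted
ledger.record(enrichment); // retry after a timeout: ignored
console.log(ledger.total("test-customer-id", "email_enrichment")); // 1
```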

Under real workloads, multiple requests may attempt to consume from the same pool at the same time. If enforcement is not consistent, one of two things happens: either limits are exceeded silently, or legitimate requests are blocked incorrectly. Both outcomes erode trust.

Enforcing limits correctly in this scenario is not just about computation; it is about consistency, concurrency, and reliability under load.
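The first failure mode is easy to reproduce even in a single-threaded runtime. In the sketch below, ten parallel requests each cost 30 credits against a 100-credit pool. The naive handler checks the balance, awaits (standing in for a network hop or the AI call itself), then debits, so every request passes the check before any debit lands. The reserving handler checks and debits in one synchronous step before doing any async work. The pool class and handlers are hypothetical; across multiple processes or hosts, the same idea requires an atomic operation in shared storage, not an in-memory object.

```typescript
// Hypothetical in-memory credit pool; not Stigg's implementation.
class CreditPool {
  remaining: number;

  constructor(remaining: number) {
    this.remaining = remaining;
  }

  // Check and debit with no await in between, so nothing can interleave.
  tryReserve(amount: number): boolean {
    if (this.remaining < amount) return false;
    this.remaining -= amount;
    return true;
  }
}

type Handler = (pool: CreditPool, cost: number) => Promise<boolean>;

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Naive check-then-act: the check goes stale during the await.
const naiveRequest: Handler = async (pool, cost) => {
  const hasAccess = pool.remaining >= cost;
  await sleep(1); // other requests run their checks here
  if (!hasAccess) return false;
  pool.remaining -= cost;
  return true;
};

// Reserve first, then do the slow work. A real system would also
// release the reservation if the operation fails.
const reservingRequest: Handler = async (pool, cost) => {
  if (!pool.tryReserve(cost)) return false;
  await sleep(1); // the AI operation would run here
  return true;
};

async function simulate(handler: Handler, parallel: number, cost: number, budget: number) {
  const pool = new CreditPool(budget);
  const results = await Promise.all(
    Array.from({ length: parallel }, () => handler(pool, cost)),
  );
  return { allowed: results.filter(Boolean).length, remaining: pool.remaining };
}

simulate(naiveRequest, 10, 30, 100).then((r) => console.log("naive", r));
// naive { allowed: 10, remaining: -200 }
simulate(reservingRequest, 10, 30, 100).then((r) => console.log("reserving", r));
// reserving { allowed: 3, remaining: 10 }
```

The naive version silently overspends the pool by 200 credits; the reserving version admits exactly as many requests as the budget allows.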

Traditional approaches suggested by billing or reporting systems do not hold up here:

- Webhooks introduce latency and failure points. Each request triggers an external call that can fail, time out, or queue. Even a small failure rate results in incorrect enforcement across thousands of concurrent requests.

- Polling creates stale data or network overload. To maintain accurate limits, polling intervals would need to be sub-second, which floods infrastructure and still cannot guarantee correctness during bursts.
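A back-of-the-envelope estimate shows why polling struggles. If a service learns the true balance only once every T seconds and serves R requests per second, up to R × T requests can be admitted against a stale balance in each interval. Taking the 200,000 requests per minute from the example above and assuming, for illustration, a one-second polling interval:

```latex
\begin{aligned}
R &= \frac{200{,}000}{60} \approx 3{,}333 \ \text{requests/s} \\
R \cdot T &\approx 3{,}333 \times 1 = 3{,}333 \ \text{requests per interval decided on stale data}
\end{aligned}
```

Halving T halves that window but doubles the polling traffic, which is the tradeoff the bullet above describes.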

The challenge is not just defining limits. It is resolving consumption across organizational structure in a deterministic, consistent way, while accounting for parallel requests, shared pools, and complex allocations, all with low latency.

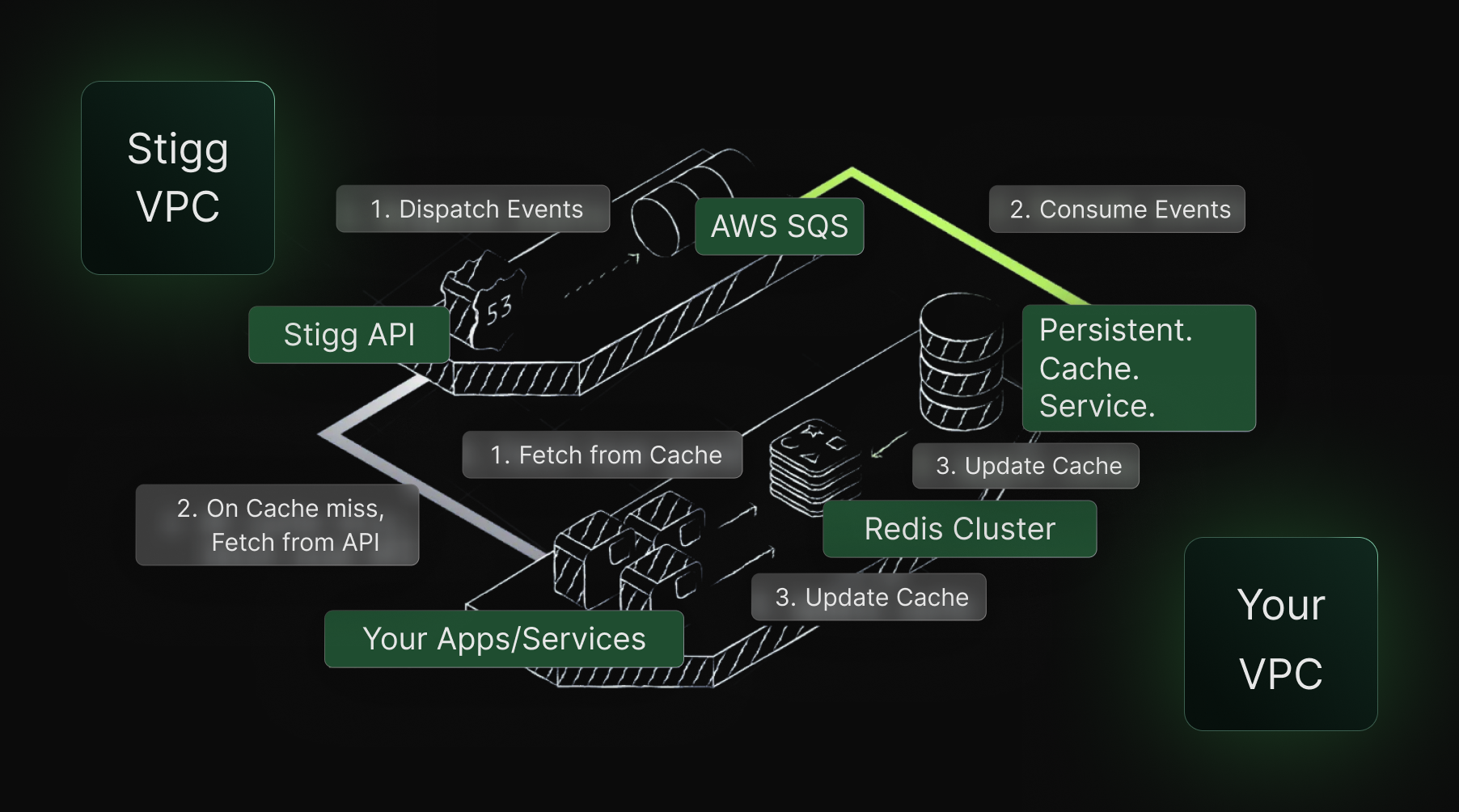

At this point, enforcement has to live as close to execution as possible. That is why we built our solution to run right next to the source, with no workarounds in between.

Read more about Stigg’s Sidecar and the Sidecar SDK.

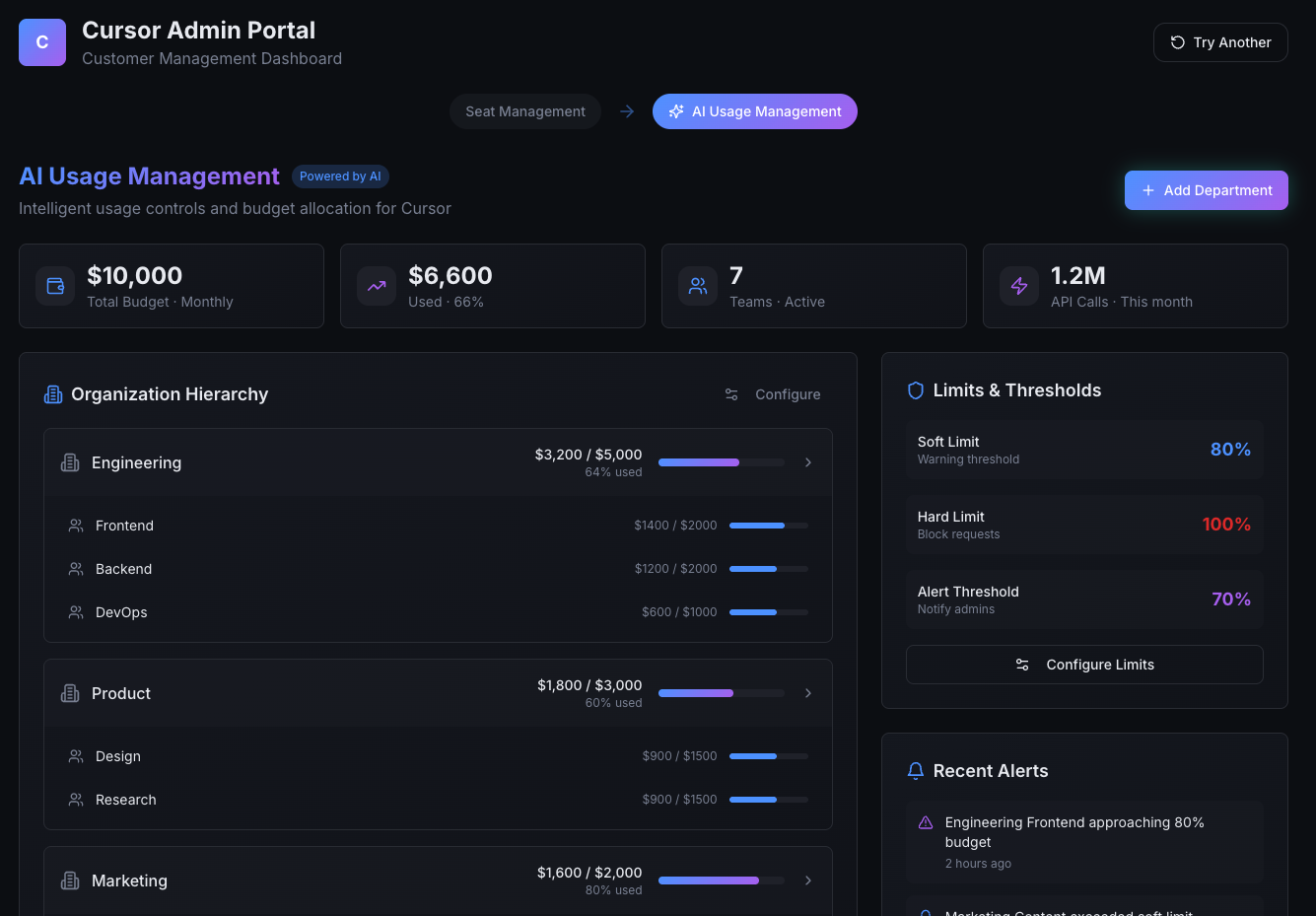

Management and visibility that matches enterprise structure

Control without visibility is not control.

Enterprise customers expect to manage usage the same way they manage their organization.

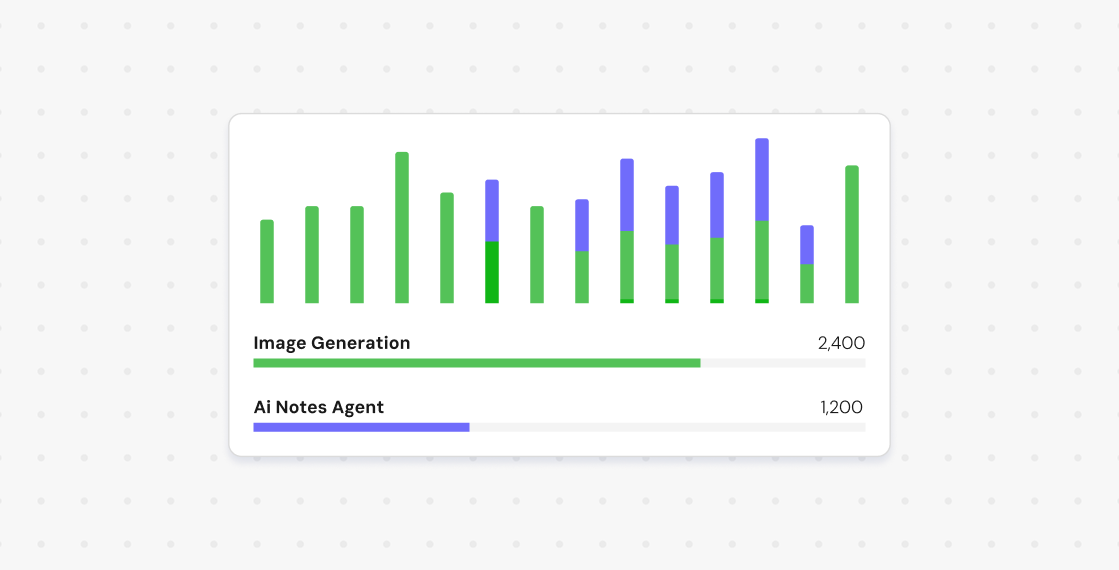

They want to see consumption, limits, and remaining budgets at the levels where decisions are made: individual users, teams and departments, environments and business units, and the organization as a whole.

This visibility is not just for reporting. It is how enterprises validate that usage is behaving as expected. If customers cannot clearly explain their own usage, they will not trust the system, even if billing is technically correct.

From an engineering standpoint, this means usage data must be explainable, not just accurate. Consumption must map cleanly back to organizational structure and policies.
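One way to make usage explainable is to attribute every event to the resource that generated it and roll the totals up the organizational tree, so the number a department admin sees is exactly the sum of what their teams and users consumed. A minimal sketch, assuming a parent map shaped like the resource hierarchy earlier in this post (the department, team, and `user-bob` parent links are assumed, since they are elided there); the ids, amounts, and `rollUp` function are illustrative.

```typescript
// child -> parent links (assumed hierarchy mirroring the resources example)
const parentOf: Record<string, string | undefined> = {
  "user-alice": "team-growth",
  "user-bob": "team-growth",
  "team-growth": "dept-marketing",
  "dept-marketing": "org-acme",
  "org-acme": undefined,
};

interface AttributedUsage {
  resourceId: string; // the resource that generated the consumption
  credits: number;
}

// Credit each event to its resource and to every ancestor above it.
function rollUp(events: AttributedUsage[]): Map<string, number> {
  const totals = new Map<string, number>();
  for (const e of events) {
    let id: string | undefined = e.resourceId;
    while (id !== undefined) {
      totals.set(id, (totals.get(id) ?? 0) + e.credits);
      id = parentOf[id];
    }
  }
  return totals;
}

const totals = rollUp([
  { resourceId: "user-alice", credits: 120 },
  { resourceId: "user-bob", credits: 80 },
  { resourceId: "user-alice", credits: 30 },
]);

console.log(totals.get("user-alice"), totals.get("team-growth"), totals.get("org-acme")); // 150 230 230
```

Because every level’s total is derived from the same attributed events, the organization’s figure can always be traced back to the users who produced it.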

Use Stigg’s Widgets capability to take this off your plate:

import { StiggProvider, UsageManagementPortal } from '@stigg/react-sdk';
import { useCurrentUser } from './hooks/useCurrentUser'; // hypothetical: your app's own auth hook

export default function AIGovernancePage() {
  const { user, organization } = useCurrentUser();
  // Only admins can change allocations; everyone else gets a read-only view
  const isAdmin = user.role === 'admin';

  return (
    <div className="admin-page">
      <StiggProvider apiKey="CLIENT_API_KEY" customerId={organization.id}>
        <UsageManagementPortal
          rootResourceId={organization.id}
          creditCurrency="ai-credits"
          readOnly={!isAdmin}
        />
      </StiggProvider>
    </div>
  );
}

Enforcement must happen as usage occurs

AI-driven workloads are not polite. They are bursty, parallel, and often automated. By the time asynchronous systems observe usage, the moment to enforce has already passed. Effective usage management requires enforcement to happen in near real time, on the critical path to execution.

This is where most teams underestimate the problem.

Balancing latency, correctness, and scale is a hard system challenge. It requires careful tradeoffs around consistency, failure handling, and concurrency. Traditional billing and reporting systems are not designed for this role.

For a deeper dive into the practical tradeoffs involved in enforcing credits and usage at scale, see this writeup on building AI credits and why enforcing them correctly was harder than expected.

The missing control layer

Most systems lack a dedicated usage control layer.

This layer sits between execution and billing. It evaluates limits, budgets, and policies in real time and turns pricing intent into runtime behavior.
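Put in code, such a layer is a single decision point that every AI call passes through: reserve before execution, reconcile after. The sketch below assumes a hypothetical `UsageControl` interface and an in-memory stand-in with one shared budget. In practice the interface might be backed by calls like the entitlement check and `reportEvent` shown earlier, but none of these names are an SDK API.

```typescript
// Hypothetical usage control interface; not a Stigg SDK API.
interface Decision {
  allowed: boolean;
  reason?: string;
}

interface UsageControl {
  // Decide, and reserve the estimate, before execution.
  authorize(resourceIds: string[], estimatedCredits: number): Promise<Decision>;
  // Reconcile the reservation with what was actually consumed.
  report(resourceIds: string[], estimatedCredits: number, actualCredits: number): Promise<void>;
}

type Result<T> = { ok: true; value: T } | { ok: false; reason: string };

// Single decision point that every AI call passes through.
async function withUsageControl<T>(
  control: UsageControl,
  resourceIds: string[],
  estimatedCredits: number,
  operation: () => Promise<{ value: T; creditsUsed: number }>,
): Promise<Result<T>> {
  const decision = await control.authorize(resourceIds, estimatedCredits);
  if (!decision.allowed) return { ok: false, reason: decision.reason ?? "denied" };

  const outcome = await operation().catch(async (err: unknown) => {
    await control.report(resourceIds, estimatedCredits, 0); // failed: release the reservation
    throw err;
  });
  await control.report(resourceIds, estimatedCredits, outcome.creditsUsed);
  return { ok: true, value: outcome.value };
}

// In-memory stand-in with one shared budget, for demonstration only.
function inMemoryControl(budget: number): UsageControl & { remaining(): number } {
  let remaining = budget;
  return {
    async authorize(_resourceIds, estimate) {
      // Check and reserve with no await in between.
      if (estimate > remaining) return { allowed: false, reason: "budget exhausted" };
      remaining -= estimate;
      return { allowed: true };
    },
    async report(_resourceIds, estimate, actual) {
      remaining += estimate - actual; // refund the unused part of the reservation
    },
    remaining: () => remaining,
  };
}

const control = inMemoryControl(1_000);
withUsageControl(control, ["user-01"], 600, async () => ({ value: "summary", creditsUsed: 400 }))
  .then((result) => console.log(result, control.remaining())); // { ok: true, value: 'summary' } 600
```

Routing every call through one wrapper keeps the policy in one place instead of duplicating guards across services. Reserving the estimate up front keeps concurrent calls from spending the same credits twice, and releasing it when the operation throws keeps failed calls from draining the budget.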

Without it, usage management becomes reactive. Enforcement happens too late. Logic gets duplicated across services. Control becomes fragmented and brittle.

A proper control layer centralizes these decisions and scales with AI-driven workloads, rather than fighting them.

Why usage management replaces user management

Traditional SaaS systems were controlled through users. Access was granted or denied based on identity and roles. Seats were the primary unit of control.

AI changes this model.

When software is consumed by algorithms instead of people, identity alone is no longer sufficient. What matters is not who is calling the system, but how much is being consumed, by which part of the organization, and under what constraints.

Usage management becomes the new control layer because it answers a different question:

What can be consumed right now, and should it be allowed?

This shift is explored further in When Your Customer Is an Algorithm, which explains why automated consumption breaks traditional pricing assumptions and pushes control into runtime systems.

Without this shift, AI-driven products cannot scale safely. Enterprises are left with unpredictability instead of control, and engineering teams are left compensating for missing infrastructure.